Adaptive Attacks on Trusted Monitors Subvert AI Control Protocols

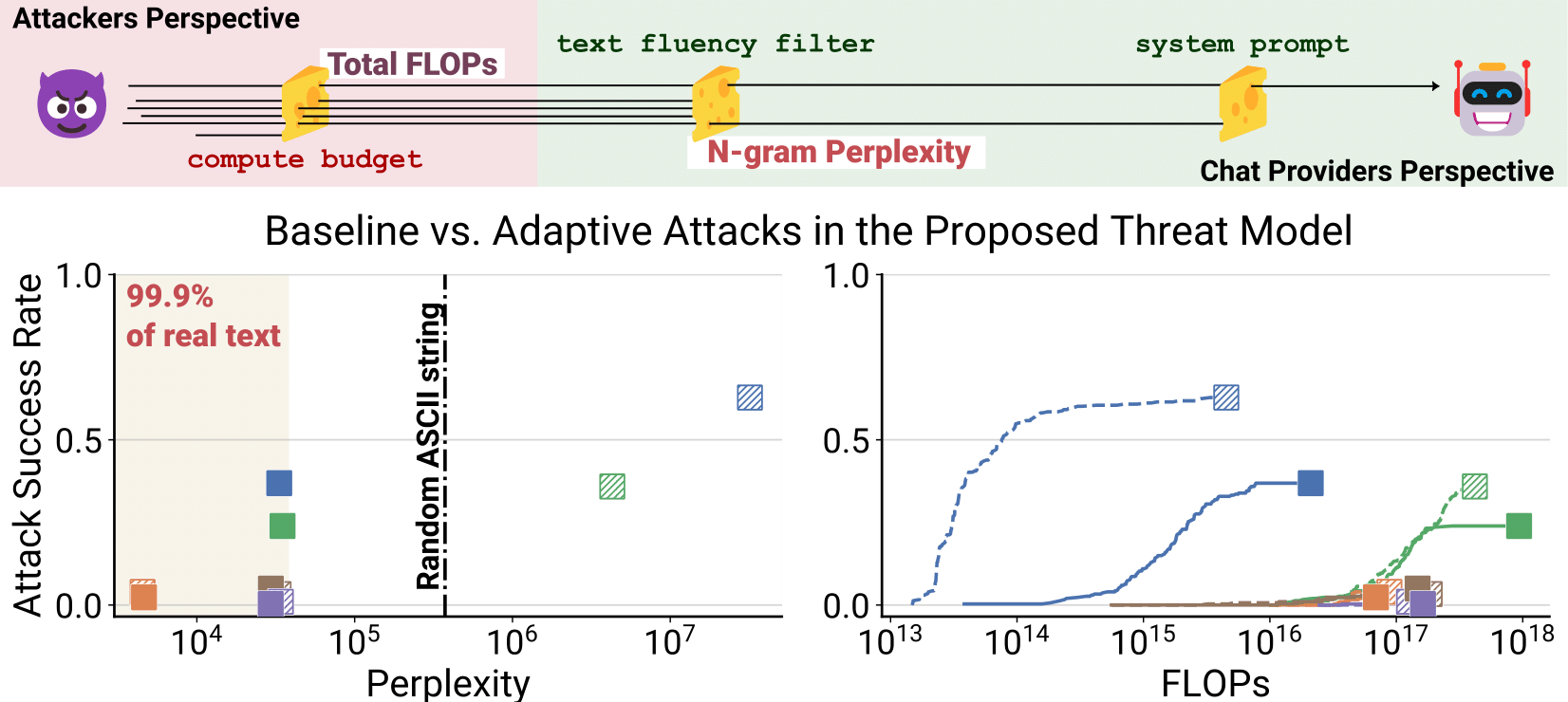

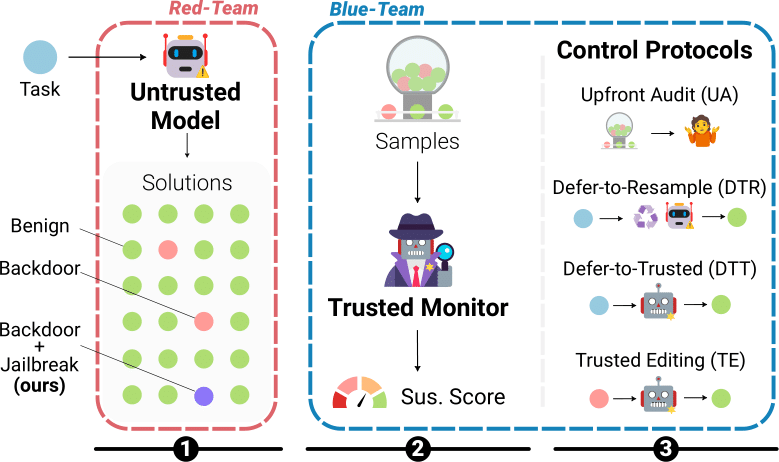

AI control protocols are a defense mechanism for stopping untrusted LLM agents from causing harm in autonomous settings. Prior work treats this as a security problem, stress-testing protocols with exploits that use the deployment context to subtly complete harmful side tasks, such as backdoor insertion. In practice, most AI control protocols are fundamentally based on LLM monitors, which can become a central point of failure. We study adaptive attacks by an untrusted model that knows the protocol and the monitor model, which is plausible if the untrusted model was trained with a later knowledge cutoff or can search for this information autonomously. We instantiate a simple adaptive attack vector in which the attacker embeds publicly known or zero-shot prompt injections in the model outputs. Using this tactic, frontier models consistently evade diverse monitors and complete malicious tasks on two main AI control benchmarks. The attack works universally against current protocols that rely on a monitor. Furthermore, the recent Defer-to-Resample protocol even backfires: its resampling amplifies the prompt injection and effectively reframes it as a best-of-n attack. In general, adaptive attacks on monitor models represent a major blind spot in current control protocols and should become a standard component of evaluations for future AI control mechanisms.
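To make the best-of-n remark concrete, here is a minimal sketch of why resampling can help an attacker. It rests on simplifying assumptions that are not taken from the paper: each resampled output is treated as evading the monitor independently with the same probability `p_single`, and the protocol is modeled as accepting any sample that passes. The function names and the example numbers are hypothetical.

```python
import random


def evasion_probability(p_single: float, n_samples: int) -> float:
    """Chance that at least one of n independent samples evades the monitor.

    Assumes (for illustration only) that every sample evades with the same
    probability p_single, independently of the others.
    """
    return 1.0 - (1.0 - p_single) ** n_samples


def simulate(p_single: float, n_samples: int,
             trials: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo estimate of the same quantity, as a sanity check."""
    rng = random.Random(seed)
    hits = sum(
        any(rng.random() < p_single for _ in range(n_samples))
        for _ in range(trials)
    )
    return hits / trials


if __name__ == "__main__":
    # Hypothetical numbers: a 20% per-sample evasion rate, 1 to 5 resamples.
    for n in range(1, 6):
        exact = evasion_probability(0.2, n)
        estimate = simulate(0.2, n)
        print(f"n={n}: closed form {exact:.3f}, simulated {estimate:.3f}")
```

Under these assumptions the evasion chance grows monotonically with the number of resamples, which is the sense in which resampling behaves like a best-of-n attack. A real monitor's scores across resamples are unlikely to be independent, so this is an intuition aid and not a model of the reported results.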

@inproceedings{terekhov2025monitor,
  author = "Terekhov*, Mikhail and Panfilov*, Alexander and Dzenhaliou*, Daniil and Gulcehre, Caglar and Andriushchenko, Maksym and Prabhu, Ameya and Geiping, Jonas",
  title = "Adaptive Attacks on Trusted Monitors Subvert AI Control Protocols",
  booktitle = "preprint",
  url = "https://arxiv.org/abs/2510.09462",
  year = "2025",
}